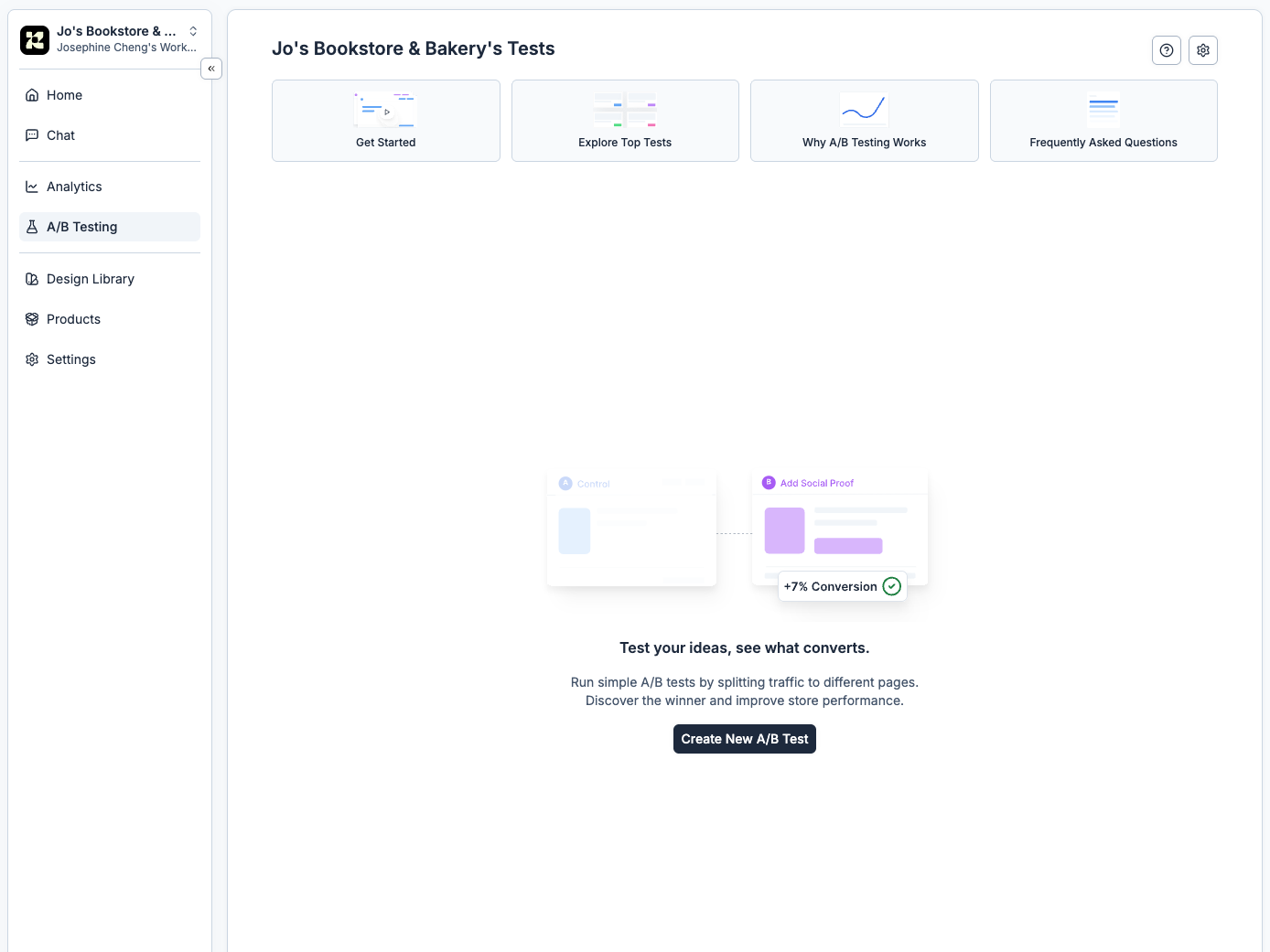

Where to A/B Test in Replo

Access the A/B testing tool from the workspace menu. Navigate to your workspace settings and select A/B Testing to create new experiments, view running tests, and analyze results. From here, you can create forever links that drive traffic to your A/B tests, set up traffic splits between a control and as many variant pages as you want, and track conversion metrics, all without leaving Replo.

A/B Testing Strategy for Beginners

Start by researching your site using Google Analytics, heatmaps, session recordings, and customer surveys. Focus on high-traffic pages with poor metrics. The metrics you track depend on your goals: clickthrough rate, AOV, bounce rate, scroll depth, add-to-cart rate, and more. Most brands focus on conversion rate (CVR) since it impacts revenue most directly.

Develop strong hypotheses grounded in measurable data observations. Include the exact metric change you expect and the predicted impact, with clear reasoning.

During execution, run simple A/B tests with just two versions. Test single elements to isolate impact, and commit to full 2-4 week test durations (averaging about 2 full business cycles) so the test has time to reach statistical significance. Document all learnings, including failed or inconclusive tests.

Common Mistakes to Avoid

- Testing with insufficient traffic

- Running too many variations simultaneously

- Stopping tests prematurely

- Testing multiple elements at once per variant

When You Should Start A/B Testing

You’re ready when you have:

- 1,000+ weekly visitors (5,000+ monthly) to pages you’ll test

- 100+ conversions per month minimum

- Google Analytics properly installed with goals configured

- 2-4 weeks of baseline data showing stable traffic patterns

- Resources to implement winning variations after testing

Hold off on A/B testing if:

- Your website has major bugs or technical issues

- Traffic fluctuates wildly week-to-week

- You’re in the middle of a major store redesign

- You have fewer than 5-10 conversions weekly

If You Lack Traffic

Start with email A/B testing (needs only 500-1,000 subscribers) or PPC ad testing (5,000+ impressions) to build experimentation skills while growing site traffic. This lets you learn how A/B testing works on a smaller scale and generate ideas for larger tests when your store is ready. For example, if a certain CTA button performs well in your newsletters, note that as a future element to A/B test on your webpages.

What You Should A/B Test

Here’s the order of priority we recommend:

- High-Traffic, High-Impact Pages First

- Specific Elements to Test

Start with pages that drive the most traffic and conversions. These pages have the biggest impact on your business when optimized.

- Homepage (your highest traffic page)

- Top 3-5 product pages by traffic

- Checkout process

- Category pages

Step-by-Step A/B Testing Guide

Follow this process to run your first A/B test. We’re covering an example use case to illustrate the steps, so adapt the goals and metrics to fit your own test.

Identify the Problem

Review your Google Analytics dashboard and look for:

- High-traffic pages with low conversion rates

- Pages with high bounce rates

- Checkout abandonment points

Add Heatmap Tracking

Install Hotjar or Microsoft Clarity to see live user interactions with your site. Replo integrates directly with both tools, so you can get up-to-date heatmap tracking on any of your Replo pages.

Take note of:

- Where users click

- How far they scroll

- What they ignore

Write Your Hypothesis

Make sure your impact is measurable and quantifiable.

We like this template: “Based on [data], we believe that [change] will [impact] because [reasoning].”

Example hypothesis: “Based on heatmap data showing 70% of mobile users never scrolling below the fold, we believe moving the Add to Cart button above the fold will increase add-to-cart rates by at least 15% because users won’t have to search for the primary conversion action.”

Calculate Sample Size

Use a sample size calculator to determine how many visitors each variation needs before a difference in results can be distinguished from random noise.

You’ll need to know the following:

- Current conversion rate

- Relative minimum detectable effect (usually set to 15% for a beginner A/B test)

- Confidence level (usually set to 95% for a beginner A/B test)

- Statistical power (usually set to 80% for a beginner A/B test)

For this example, we’ll use the following values:

- Current conversion rate: 2%

- Relative minimum detectable effect: 15%

- Confidence level: 95%

- Statistical power: 80%
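To make the calculation concrete, here is a minimal sketch of what a sample size calculator might do with the example inputs above. It assumes a two-sided, two-proportion z-test with a normal approximation. The function name `sample_size_per_variation` is hypothetical, and other calculators may use slightly different formulas and return slightly different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, relative_mde, confidence=0.95, power=0.80):
    """Approximate visitors needed per variation (two-sided, two-proportion z-test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # rate the variant must reach to count as a lift
    p_bar = (p1 + p2) / 2

    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95% confidence
    z_beta = NormalDist().inv_cdf(power)  # about 0.84 at 80% power

    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Example inputs from this guide: 2% baseline, 15% relative lift, 95% confidence, 80% power
n = sample_size_per_variation(baseline_rate=0.02, relative_mde=0.15)
print(f"Visitors needed per variation: {n:,}")
```

Under these assumptions the result lands in the tens of thousands of visitors per variation, which is why low-traffic stores struggle to detect small lifts. Raising the minimum detectable effect shrinks the required sample quickly.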

Create Your Page Variation

In your page building platform of choice, make the necessary edits. Keep everything else the same.

For this example, that means moving the “Add to cart” button to be above the fold.

In Replo, you can do this without any manual edits. Simply prompt Replo by typing “move the add to cart button above the fold” and hitting enter, and Replo will do it for you.

For more complex edits (not needed for a beginner A/B test, but necessary for more advanced tests), you can give Replo a list of edits to work through, and it can execute on all of them at once.

Once you’re done, name the page clearly so you know if this is the variant or the control.

Set Up Your A/B Testing Platform

There are many A/B testing platforms to choose from.

For the most streamlined A/B testing process that comes built-in with our campaign builder, use Replo. Create and edit page variations, and set up and run your experiments, all in the same app.

With Replo, you can create forever links that are reused across multiple (non-simultaneous) paid ad campaigns, such as on Meta, so you don’t need to retrain your ad algorithms every time you run a new A/B test.

Plus, you can test pages built in any platform of your choice, not just on Replo. All you have to do is create your Replo forever link which drives traffic to the A/B test, and then copy and paste in the URL of your original page and your variant page.

In the case of this example, set your primary goal as landing page conversion rate.

Q/A Your Experiment

Before you launch your A/B test, visit the experiment link across a number of browsers and devices. Test all links and buttons (especially the one that you are tracking!).

Items to Q/A include:

- Chrome, Safari, Firefox

- Desktop, mobile, tablet

- Different screen sizes

- Verify tracking fires correctly

Launch Your Experiment

Here’s a quick rundown of things to do and not to do:

- Set the test to run for a minimum of 4 weeks (2 full business cycles)

- Don’t stop early even if you see significance

- Document start date and expected end date for recordkeeping
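For recordkeeping, the expected end date can be estimated from the required sample size and your traffic. The sketch below is one way to do that, not a Replo feature: `plan_test_dates` is a hypothetical helper, the visitor numbers are made up for illustration, and the 4-week floor comes from the rule above.

```python
from datetime import date, timedelta
from math import ceil


def plan_test_dates(start, visitors_per_variation, weekly_visitors, variations=2, min_weeks=4):
    """Return (weeks_to_run, expected_end_date), rounded up to whole weeks."""
    total_needed = visitors_per_variation * variations
    weeks_for_sample = ceil(total_needed / weekly_visitors)
    # Never go below the minimum duration, even if the sample fills up sooner.
    weeks = max(min_weeks, weeks_for_sample)
    return weeks, start + timedelta(weeks=weeks)


# Hypothetical inputs: 5,000 visitors needed per variation, 2,000 visitors per week
weeks, end_date = plan_test_dates(
    start=date(2025, 1, 6),
    visitors_per_variation=5000,
    weekly_visitors=2000,
)
print(f"Run for {weeks} weeks; expected end date: {end_date.isoformat()}")
```

Rounding up to whole weeks keeps every day of the week equally represented in both variations.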

Monitor The Test (But Don't Interfere)

Check your A/B test weekly for:

- Technical issues, such as broken pages, 404 errors, or tracking problems.

- Traffic distribution. This should stay close to an even 50/50 split.
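How close to 50/50 is close enough depends on how much traffic the test has received. One common way to judge it is a sample ratio mismatch check. The sketch below uses a normal approximation to the binomial distribution. The function name, the visitor counts, and the 0.01 cutoff are all assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist


def split_p_value(control_visitors, variant_visitors, expected_control_share=0.5):
    """Two-sided p-value for 'the observed split matches the intended split'."""
    total = control_visitors + variant_visitors
    expected = total * expected_control_share
    std_dev = sqrt(total * expected_control_share * (1 - expected_control_share))
    z = (control_visitors - expected) / std_dev
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical visitor counts for two different tests
p_even = split_p_value(5050, 4950)    # small imbalance, plausibly random
p_skewed = split_p_value(5400, 4600)  # large imbalance, worth investigating

for label, p in [("5050 / 4950", p_even), ("5400 / 4600", p_skewed)]:
    verdict = "investigate the split" if p < 0.01 else "split looks fine"
    print(f"{label}: p = {p:.4f} -> {verdict}")
```

A very small p-value suggests the split itself is broken (for example, a redirect failing on one variant), in which case the conversion numbers should not be trusted until the cause is found.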

Check Statistical Validity

Before analyzing your test results, verify that the following is true:

- Sample size reached: Did each variation get your calculated minimum visitors?

- Test duration met: Did the test run for at least 2 weeks and span complete business cycles?

- Statistical significance achieved: Confidence level ≥95%?

- No external factors: No major sales, seasonal marketing campaigns, or technical issues during the test?
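Most A/B testing tools report the confidence level for you. If you want to sanity-check it by hand, the sketch below runs a pooled two-proportion z-test, which is one standard approach and not necessarily what your tool uses. The function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(control_conversions, control_visitors,
                          variant_conversions, variant_visitors):
    """Return (relative_lift, two_sided_p_value) using a pooled z-test."""
    p_control = control_conversions / control_visitors
    p_variant = variant_conversions / variant_visitors
    pooled = (control_conversions + variant_conversions) / (control_visitors + variant_visitors)
    std_err = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors))
    z = (p_variant - p_control) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    lift = (p_variant - p_control) / p_control
    return lift, p_value


# Hypothetical results: 400 of 20,000 control visitors converted vs. 480 of 20,000 variant visitors
lift, p_value = two_proportion_z_test(400, 20000, 480, 20000)
significant = p_value < 0.05  # a 95% confidence level corresponds to p < 0.05
print(f"Relative lift: {lift:+.1%}, p-value: {p_value:.4f}, significant: {significant}")
```

A p-value below 0.05 corresponds to the 95% confidence threshold in the checklist. It only means something if the sample size and duration checks have also passed.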

Analyze Your A/B Test Results

Given the set-up of our experiment, there are three possible outcomes:

- There is a clear winner between the two versions (this is the most common)

- There is no significant difference between the control version and the test version

- There is a clear losing version

Also break the results down by segment to see where the effect comes from:

- Device type (mobile vs. desktop)

- New vs. returning visitors

- Traffic source (organic, paid, direct)

- Geographic location
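The dimensions listed above (device type, visitor type, traffic source, location) can each be used to break results down by segment. Here is a minimal sketch of a per-segment comparison, assuming you can export visitor and conversion counts per segment and variation. The rows and the function name are hypothetical.

```python
from collections import defaultdict

# Hypothetical export rows: (segment, variation, visitors, conversions)
rows = [
    ("mobile", "control", 6000, 90),
    ("mobile", "variant", 6000, 132),
    ("desktop", "control", 4000, 110),
    ("desktop", "variant", 4000, 108),
]


def conversion_rates_by_segment(rows):
    """Map each segment to {variation: conversion_rate}."""
    rates = defaultdict(dict)
    for segment, variation, visitors, conversions in rows:
        rates[segment][variation] = conversions / visitors
    return dict(rates)


rates = conversion_rates_by_segment(rows)
for segment, by_variation in rates.items():
    control = by_variation["control"]
    variant = by_variation["variant"]
    lift = (variant - control) / control
    print(f"{segment}: control {control:.2%}, variant {variant:.2%}, lift {lift:+.1%}")
```

Each segment holds only part of the total traffic, so per-segment differences are noisier than the overall result. Treat them as leads for follow-up tests, not as conclusions.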